Who has the fastest F1 website in 2021? Part 6

This is part 6 in a multi-part series looking at the loading performance of F1 websites. Not interested in F1? It shouldn't matter. This is just a performance review of 10 recently-built/updated sites that have broadly the same goal, but are built by different teams, and have different performance issues.

- Part 1: Methodology & Alpha Tauri

- Part 2: Alfa Romeo

- Part 3: Red Bull

- Part 4: Williams

- Part 5: Aston Martin

- ➡️ Part 6: Ferrari

- Part 7: Haas

- Part 8: McLaren

- Bonus: Google I/O

- …more coming soon…

Ferrari

- Link

- First run: 38.9s (raw results)

- Second run: 13.9s (raw results)

- Total: 52.8s

- 2019 total: 46.1s

The Ferrari site is my stand-out memory of the 2019 article. Their site was slow because of a 1.8MB blocking script, but 1.7MB of that was an inlined 2300x2300 PNG of a horse that was only ever displayed at 20x20. Unfortunately their 2021 site is slower, and this time it isn't down to one horse.

Possible improvements

- 25 second delay to content render caused by manually-blocking JavaScript. Covered in detail below.

- 3 second delay to content render caused by unused CSS. Covered in detail below.

- 5 second delay to content render caused by other blocking script. Covered in detail below.

- 5 second delay to main image caused by render-blocking CSS & script. Important images should be part of the source, or preloaded.

- Additional 2 second delay to main image caused by an extra connection. This problem is covered in part 1, but the solution here is just to move the images to the same server as the page.

- 50+ second delay to content-blocking cookie modal caused by… a number of things, covered in part 1.
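The preload fix suggested for the main image can be a single tag near the top of the `<head>`. Below is a minimal TypeScript sketch. It assumes a server-side template that builds the head as a string. The `preloadImageTag` helper and the `/images/hero.avif` path are hypothetical and do not come from the Ferrari site:

```typescript
// Hypothetical template helper: emits a preload hint so the browser starts
// fetching the main image without waiting for blocking CSS and script.
// Attribute values are not escaped, so only pass trusted URLs and types.
function preloadImageTag(href: string, type?: string): string {
  // With `type`, browsers that can't decode the format (e.g. AVIF) skip the fetch.
  const typeAttr = type ? ` type="${type}"` : "";
  return `<link rel="preload" as="image" href="${href}"${typeAttr}>`;
}

// Place the result early in <head>, before any render-blocking resources.
const hint = preloadImageTag("/images/hero.avif", "image/avif");
console.log(hint);
```

A plain `<img>` in the HTML source gets the same early discovery without a hint. A preload is mainly useful when the image is applied through CSS or script.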

As always, some of these delays overlap.

Key issue: Manually-blocking JavaScript

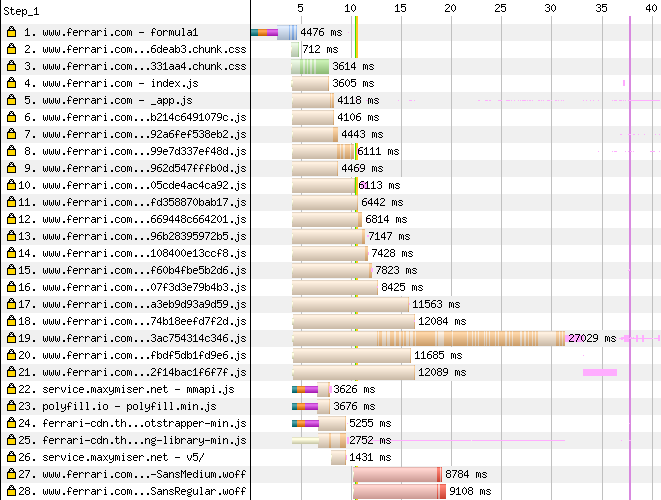

Here's the top of the waterfall:

Rows 4-26 are all JavaScript, which is a bit of a bad smell. JavaScript doesn't always mean 'bad', but row 19 is particularly worrying due to its size.

The green vertical line around the 11s mark is the first render, but as the video above shows, it's just a black screen. This is what I mean by 'manually-blocking' – the additional script isn't blocking the browser's parser or renderer, but the developers have chosen to show a black overlay until that script has loaded.

We already saw this in part 2, but in that case it was a small late-loading script. Here, the Ferrari folks have used <link rel="preload"> to solve the priority problem, but the remaining problem is the sheer size of the script.

The script is 1.2MB gzipped, which is a significant download cost, but once it's unzipped it becomes 6MB of JavaScript for the device to parse, and that has an additional cost:

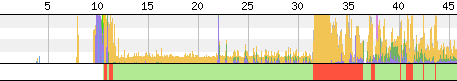

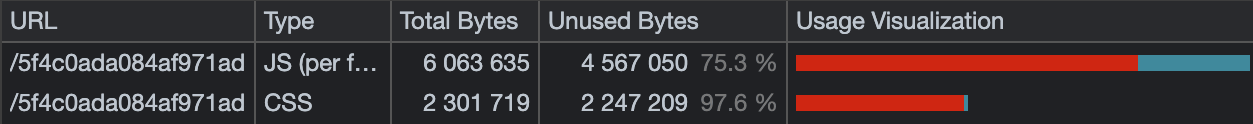

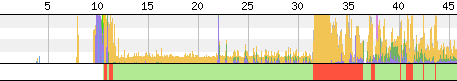

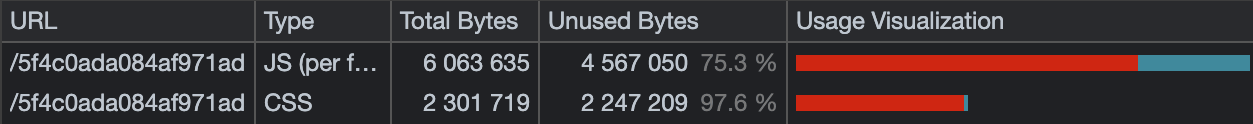

The bottom of the waterfall shows us the CPU usage on the device, and once that massive script lands, we get a bit of a car crash around the 32s mark. This will lock up anything that depends on the main thread for around 5 seconds. If we take a look at the coverage in Chrome DevTools:

The first row is the script, and it shows us 75% of that parsing effort is for nothing. I'd also question needing over a megabyte of JavaScript for a page like this, but I haven't dug into it.
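The unused percentage can also be computed from an exported coverage report. Below is a minimal sketch. It assumes the Chrome DevTools Coverage export is a JSON array of entries with `url`, `text` and non-overlapping `ranges` of `{ start, end }` character offsets, so check this against your own export. `unusedFraction` and `wastedBytes` are hypothetical helpers, and character counts only approximate bytes:

```typescript
// Assumed shape of one entry in a Chrome DevTools Coverage export.
interface CoverageRange {
  start: number; // inclusive character offset
  end: number;   // exclusive character offset
}

interface CoverageEntry {
  url: string;
  text: string;
  ranges: CoverageRange[]; // assumed non-overlapping
}

// Fraction of the file that was never executed (JS) or applied (CSS).
function unusedFraction(entry: CoverageEntry): number {
  const total = entry.text.length;
  if (total === 0) return 0;
  const used = entry.ranges.reduce((sum, r) => sum + (r.end - r.start), 0);
  return 1 - used / total;
}

// Rough amount of the uncompressed file that was downloaded and parsed for nothing.
function wastedBytes(entry: CoverageEntry, uncompressedBytes: number): number {
  return Math.round(unusedFraction(entry) * uncompressedBytes);
}
```

At the 75% unused figure reported above, about 4.5MB of the 6MB script (0.75 × 6MB) is parsed without ever being used.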

Unfortunately there isn't a quick win here. They need to stop manually blocking content rendering, and split that massive script up. We looked at code splitting over at tooling.report. Ideally every independently functioning piece of JavaScript should be its own entry point, so the browser only loads what the page needs. That would massively cut down the download and parse time.
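One way to get "only what the page needs" is to load each feature's chunk on demand. Below is a minimal TypeScript sketch, not a description of how the Ferrari site is built. The feature names and selectors are hypothetical. In a real build, each `load` would wrap a dynamic `import()`, which bundlers typically emit as a separate chunk:

```typescript
// A loader fetches one independently functioning chunk, e.g. in a real build:
//   () => import("./carousel.js")
type Loader = () => Promise<unknown>;

interface Feature {
  name: string;
  selector: string; // presence of this selector means the page uses the feature
  load: Loader;
}

// Pure decision step: which features does this page actually contain?
function featuresNeeded(features: Feature[], isPresent: (selector: string) => boolean): Feature[] {
  return features.filter((f) => isPresent(f.selector));
}

// Start fetching only the needed chunks, in parallel. Features that aren't
// on the page are never downloaded or parsed.
async function enhancePage(features: Feature[], isPresent: (selector: string) => boolean): Promise<void> {
  await Promise.all(featuresNeeded(features, isPresent).map((f) => f.load()));
}
```

In the browser, this would be called as `enhancePage(features, (s) => document.querySelector(s) !== null)`. Keeping the decision in a pure function makes the loading rule easy to test outside the browser.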

If I load the page without JavaScript and remove that black render-blocking overlay, there's something resembling a before-JavaScript render there. It just needs some work to make it representative of the final JavaScript-enhanced content (as in, no layout shifts as elements are enhanced), and then they wouldn't need that render-blocking overlay. I think that was the goal at some point, but I guess it didn't pan out.

Key issue: Render-blocking JavaScript

Back to the waterfall:

Rows 22-25 are traditional render-blocking scripts in the <head>. They're all on different servers, so they pay the cost of additional connections as I covered in part 1.

Rows 22, 24, and 25 appear to be trackers, which should use the defer or async attribute so they don't block rendering. The polyfill script on row 23 should use defer too, but in this case it's just an empty script, since the browser doesn't need any polyfills. They could avoid this pointless request by using the nomodule trick we saw on the Aston Martin site.
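The rules that decide whether a `<head>` script holds up rendering are compact enough to write down. Below is a minimal sketch of those rules for a browser that supports ES modules. The `HeadScript` shape and the helper name are hypothetical, and the model ignores edge cases such as inline scripts and scripts that are inserted dynamically:

```typescript
// Simplified model of a <script src> element in <head>.
interface HeadScript {
  src: string;
  async?: boolean;
  defer?: boolean;
  type?: string;      // "module" scripts are deferred by default
  nomodule?: boolean; // ignored by browsers that support ES modules
}

// Does this script block the parser, and therefore first render,
// in a module-supporting browser?
function blocksRenderInModernBrowser(s: HeadScript): boolean {
  if (s.nomodule) return false;          // not run (normally not even fetched)
  if (s.type === "module") return false; // deferred unless marked async
  return !s.async && !s.defer;           // classic script without async/defer
}
```

Applied to rows 22-25, the fix would be `defer` (or `async`) on the three trackers, plus `nomodule` on the polyfill script so module-supporting browsers skip it entirely.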

Key issue: Unused render-blocking CSS

Back to the waterfall:

The CSS request on row 3 looked a little big to me, and yes, it's 200kB, which unzips to around 2.1MB. It isn't as bad as the JavaScript, but it still shows up in the CPU usage:

It causes about a second of delay at around the 10 second mark. Looking at the coverage:

…almost all of that CSS is unnecessary.

Again, there isn't a quick fix here. That CSS just needs splitting up, so pages request only the CSS they need. A lot of web performance folks recommend inlining the CSS for a given page in a <style> in the <head>. "But what about caching??" is usually my response, but the more I see issues like this, the more I think they're right. A per-page targeted approach would avoid ending up with massive chunks of unused CSS like this.
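A per-page approach can be as simple as assembling each page's `<style>` from the components that page renders. Below is a minimal sketch. It assumes a hypothetical setup in which each component owns a small stylesheet keyed by name. Real projects would usually get this from their build tooling rather than a hand-written map:

```typescript
// Hypothetical setup: each component owns a small stylesheet, keyed by name.
type StylesByComponent = Record<string, string>;

// Build the inline <style> for one page from only the components it renders,
// instead of sending one site-wide stylesheet to every page.
function inlineStylesForPage(componentsOnPage: string[], styles: StylesByComponent): string {
  const unique = Array.from(new Set(componentsOnPage)); // include each component's CSS once
  const css = unique.map((name) => {
    const rules = styles[name];
    if (rules === undefined) {
      throw new Error(`No stylesheet registered for component "${name}"`);
    }
    return rules;
  });
  return `<style>\n${css.join("\n")}\n</style>`;
}
```

The caching trade-off mentioned above still applies: inlined CSS is re-sent with every page rather than cached as a separate file.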

Issue: Image optimisation

Y'know what? This might be the best site so far in this series in terms of image compression. Taking the main image:

Sure, AVIF gets you to 20% of the size, but we're not talking huge savings here in real terms. As usual, the AVIF is a lot smoother, but since this image sits behind text I felt I could go a little lower on the quality slider than I usually would.

Some images could be significantly smaller though:

A lot of the images on the Ferrari site aren't optimised for 2x screens. I don't think that matters so much for the previous image, since it's behind text, but the one above could do with some sharpness. The original image above is 480px wide, but the optimised versions are 720px (taken from a larger source), so in this case the optimised versions are also higher resolution.
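Serving 2x screens comes down to offering a rendition with about twice the pixels of the displayed size. Below is a minimal sketch that builds a `srcset` with density descriptors. The file naming scheme is hypothetical. The 360 CSS px display width in the test is also a hypothetical value, chosen because a 720px file (the width of the optimised versions above) would then cover a 2x screen:

```typescript
// Hypothetical naming scheme: renditions are stored as `${base}-${width}.${ext}`.
// An image displayed `cssWidth` CSS pixels wide needs roughly cssWidth * d
// real pixels to look sharp on a screen with device pixel ratio d.
function srcsetFor(base: string, ext: string, cssWidth: number, densities: number[]): string {
  return densities
    .map((d) => `${base}-${Math.round(cssWidth * d)}.${ext} ${d}x`)
    .join(", ");
}
```

The result goes in an `<img>` element's `srcset` attribute, alongside a `src` fallback, and the browser picks the rendition that matches the screen.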

How fast could it be?

Here's how fast it looks with the first-render unblocked and redundant CSS removed:

- Original

- Optimised

Like I said, it isn't an easy fix in this case, but the potential savings are huge.

Scoreboard

| Team | Score | vs 2019 | vs leader |
|---|---|---|---|
| Red Bull | 8.6 | -7.2 | Leader |
| Aston Martin | 8.9 | -75.3 | +0.3 |
| Williams | 11.1 | -3.0 | +2.5 |
| Alpha Tauri | 22.1 | +9.3 | +13.5 |
| Alfa Romeo | 23.4 | +3.3 | +14.8 |
| Ferrari | 52.8 | +6.7 | +44.2 |

Unfortunately it's bottom-of-the-table for Ferrari, by some margin, but will they stay there?
