Who has the fastest F1 website in 2021? Part 7

This is part 7 in a multi-part series looking at the loading performance of F1 websites. Not interested in F1? It shouldn't matter. This is just a performance review of 10 recently-built/updated sites that have broadly the same goal, but are built by different teams, and have different performance issues.

- Part 1: Methodology & Alpha Tauri

- Part 2: Alfa Romeo

- Part 3: Red Bull

- Part 4: Williams

- Part 5: Aston Martin

- Part 6: Ferrari

- ➡️ Part 7: Haas

- Part 8: McLaren

- Bonus: Google I/O

- …more coming soon…

Haas

- Link

- First run: 21.1s (raw results)

- Second run: 7.1s (raw results)

- Total: 28.2s

- 2019 total

Haas had the fastest F1 site in 2019, but things aren't looking so good this year. They get to their first render in around 9 seconds, but everything shifts around so much that it isn't really usable until 21.1s.

Possible improvements

- 5+ second delay to content render caused by a CSS font tracker. This is exactly the same issue covered in part 1.

- 3 second delay to content render caused by CSS on other servers, plus a redirect. This problem is covered in part 4, and the solution here is to move the CSS to the same server as the page, or load it async.

- 3 second delay to content render caused by blocking JS on another server. This problem is covered in part 1, and the solution is to use `defer` or `async` so the script doesn't block rendering.

- 10+ second delay to main image caused by JavaScript. Covered in detail below.

- 10+ seconds of layout instability caused by JavaScript. Covered in detail below.

Lots of little resources vs one big resource

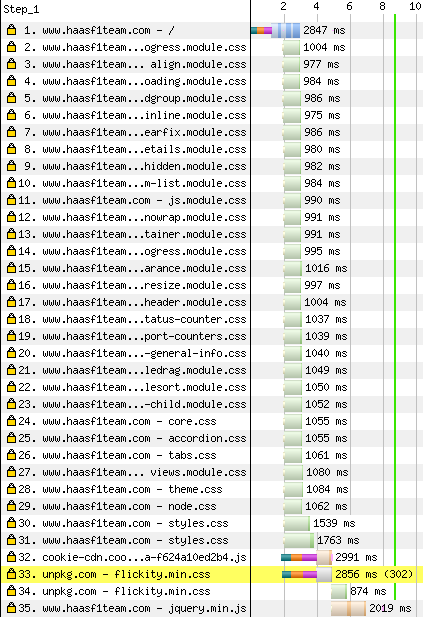

Here's the start of the waterfall:

That's a lot of CSS resources! But is it a problem? In HTTP/1 these would be downloaded in groups of 6, and that reduction in parallel request-making would have hurt performance significantly. But, looking at the waterfall above, everything seems to download in parallel thanks to HTTP/2.

So, what's the overhead compared to one CSS file? A big part of gzip and brotli compression involves back-references, e.g. "the next 50 bytes are the same as this bit earlier in the resource". That can only happen within a single resource, so compressing lots of small resources is less efficient than compressing one combined resource. There's also the metadata that comes with each request & response, such as headers, although HTTP/2 can back-reference these to other requests & responses on the same connection using HPACK header compression.
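To make the back-reference point concrete, here is a minimal Node.js sketch that compresses 32 small CSS strings separately and then as one combined string. The CSS is synthetic and made up for illustration (it is not the Haas CSS), and the sketch ignores request and response headers, so treat the numbers it prints as a demonstration of the direction of the effect rather than a measurement.

```javascript
// Compare brotli output for many small CSS resources vs one combined resource.
const { brotliCompressSync } = require("node:zlib");

// Hypothetical stand-in for a site's CSS: 32 small files repeating similar rules.
const files = Array.from({ length: 32 }, (_, i) =>
  `.component-${i} { display: flex; margin: 0 auto; padding: 16px; color: #222; }\n` +
  `.component-${i}__title { font-family: sans-serif; font-weight: 700; line-height: 1.2; }\n` +
  `@media (min-width: 768px) { .component-${i} { padding: 32px; } }\n`
);

// Size in bytes of a string after brotli compression.
const compressedSize = (text) => brotliCompressSync(Buffer.from(text)).length;

// Each file compressed on its own: back-references cannot cross file boundaries.
const separateTotal = files.reduce((sum, css) => sum + compressedSize(css), 0);

// One combined file: later rules can back-reference earlier ones.
const combinedTotal = compressedSize(files.join(""));

console.log({ separateTotal, combinedTotal });
```

Real stylesheets repeat themselves far less than this synthetic input, so the gap on a real site will be much smaller than what the sketch prints.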

Here's the size difference for the Haas site's CSS, including brotli compression and headers:

- 32 CSS resources: 48.2 kB

- 1 combined CSS resource: 37.7 kB

So we're talking about a 28% overhead in terms of size, but that's only 10.5 kB. But are there other delays, unrelated to size? I passed the site through my optimiser, so nothing was blocking rendering apart from the CSS, and generated three versions of the page:

- One with 32 CSS resources

- One with one combined CSS resource

- One with the CSS inlined as a `<style>` in the `<head>`

…and I put each through WebPageTest 9 times, and looked at how long it took them to get to first-render:

Combining the CSS into one resource saves around half a second…ish. There are a few outliers taking longer than 4 seconds – in those cases here's what happened:

In those outlying cases the primary image jumped ahead and took bandwidth away from the CSS. The impact of this could be reduced by optimising the image (it's around 10x too big), but other than that it might need some tweaking in the markup or on the server.

With inlining you don't get any of these priority issues, and it's around a second faster. Also, if you decide to inline your CSS, you can ensure you're sending exactly what the page needs, and nothing more (although I didn't do that for this test).

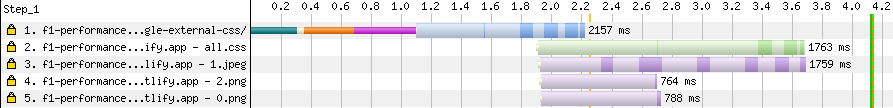

Key issue: Layout shifts as interactivity lands

The fully-loaded page has a carousel at the top, but what you see between 9s and 14s is a dump of all the links in the carousel. This is a reasonable no-JavaScript experience, but it isn't a great before-JavaScript experience.

"No-JavaScript" users are users that will not run your JavaScript. They may exist because:

- They have disabled JavaScript.

- The JavaScript failed to load due to network reasons.

- The JavaScript didn't execute due to an error (either a mistake, or lack of support in their browser), or the script was avoided due to a negative feature-detect.

You might decide that you don't have enough of these users to care about, and that's reasonable if you have good evidence. Or, you might use something like the nomodule trick we saw on the Aston Martin site, which creates a lot of these users, so it's definitely worth caring about in that case.

"Before-JavaScript" users are waiting for your JavaScript to download. There's some grey area here, because a script which takes infinite time to download can look like it's in the before-JavaScript state, but it's more like no-JavaScript.

We need to design for these states with actual users in mind, and it gets a lot easier if we can use the same design for both.

The Haas carousel has a reasonable no-JavaScript experience because it provides links to all items in the carousel without JavaScript. It's a bad before-JavaScript experience because the content jumps around as it rearranges itself into a carousel. You can see this at the 15s and 22s marks.

Progressively-enhancing a carousel

You might be more familiar with the term "hydration" if you use a framework, but the goal is the same: Give the user a reasonable before-JavaScript experience, then add in JavaScript interactivity with as little disruption as possible.

CSS scroll snap has really good support these days, which lets you create a carousel-like experience without JavaScript. It also means the carousel will use platform scroll physics. You could use some tricks to hide the scrollbar at this phase, which is fine as a before-JS experience, but maybe not great as a no-JS experience, as it will prevent some users being able to get to the other items in the carousel. If that's an issue, you could branch behaviour for no-JS users at this point.

The before-JS render should contain the images too. This means the browser can download them in parallel with the JavaScript. Right now the main image on the Haas site is in the carousel, and its download is delayed because the browser doesn't know about it until after the JavaScript loads. Also, putting the core carousel content in the HTML means you're less likely to get a layout shift when it's enhanced.

The before-JS phase should not contain the back & forward buttons of the carousel, because they won't work. This is something a lot of frameworks get wrong when it comes to hydration; they encourage the before-JS render to be identical to the enhanced version, but buttons that don't work are a bad user experience.

Then, JS comes along and adds the back & forward buttons, which use scrollTo. Adding these buttons shouldn't impact the layout of other things; they should just appear.
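As a rough sketch of that enhancement step: the code below assumes the carousel is a horizontally scrolling element (for example one using CSS scroll snap), laid out left-to-right, inside a wrapper whose CSS positions the buttons over the carousel so that adding them doesn't shift anything. The function names, the button labels and the `.carousel` class are hypothetical, not taken from the Haas site.

```javascript
// Work out where a "back" (-1) or "forward" (+1) press should scroll to:
// one carousel-width in that direction, clamped to the scrollable range.
function nextScrollLeft(scroller, direction) {
  const max = scroller.scrollWidth - scroller.clientWidth;
  const target = scroller.scrollLeft + direction * scroller.clientWidth;
  return Math.min(Math.max(target, 0), max);
}

// Add the buttons only once JavaScript is running, so they never appear broken.
function enhanceCarousel(scroller) {
  const doc = scroller.ownerDocument;
  const makeButton = (label, direction) => {
    const button = doc.createElement("button");
    button.type = "button";
    button.textContent = label;
    button.addEventListener("click", () => {
      scroller.scrollTo({
        left: nextScrollLeft(scroller, direction),
        behavior: "smooth",
      });
    });
    return button;
  };
  const back = makeButton("Back", -1);
  const forward = makeButton("Forward", 1);
  // Assumption: the wrapper's CSS overlays these buttons on the carousel.
  scroller.parentNode.append(back, forward);
  return { back, forward };
}

// In a browser, run it against the scrolling element (hypothetical class name).
if (typeof document !== "undefined") {
  const scroller = document.querySelector(".carousel");
  if (scroller) enhanceCarousel(scroller);
}
```

Because the scrolling itself is left to the browser, the carousel keeps platform scroll physics and snapping; the script only decides where to scroll to.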

Edit: Turns out such a carousel already exists. Here's the repo.

Issue: Image optimisation

Let's take that main image at the top of the page:

The original is 1440 pixels across, which is too big for a mobile device. I resized it to 720 pixels.

How fast could it be?

With the CSS inlined, and a better before-JavaScript render, here's how fast it would be:

- Original

- Optimised

There's still some instability there as the font comes in. I would tackle that by making the fallback font have similar dimensions using the CSS font loading API.
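One way this could look, as a sketch rather than the exact approach used for the test above: CSS (assumed, not shown) adjusts the fallback font's spacing so it takes up roughly the same space as the web font, and a small script uses the CSS font loading API to add a class once the web font is ready, at which point the CSS drops those adjustments. The font name `"Team Font"`, the class `font-loaded` and the function name are all hypothetical.

```javascript
// Resolve to true once the web font is usable, and flag it on the root element
// so CSS can switch from the adjusted fallback font to the web font.
function flagWhenFontLoads(doc, fontSpec, loadedClass) {
  // fonts.load() resolves with the FontFace objects it loaded; an empty list
  // means no font face matched fontSpec, so the fallback styling stays.
  return doc.fonts
    .load(fontSpec)
    .then((faces) => {
      const loaded = faces.length > 0;
      if (loaded) doc.documentElement.classList.add(loadedClass);
      return loaded;
    })
    // If the font fails to download, keep the fallback styling.
    .catch(() => false);
}

// In a browser, kick this off as early as possible.
if (typeof document !== "undefined") {
  flagWhenFontLoads(document, '1em "Team Font"', "font-loaded");
}
```

The closer the adjusted fallback is to the web font's dimensions, the smaller the shift when the class lands.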

Scoreboard

| Team | Score | vs 2019 | Gap to leader |
|---|---|---|---|
| Red Bull | 8.6 | -7.2 | Leader |
| Aston Martin | 8.9 | -75.3 | +0.3 |
| Williams | 11.1 | -3.0 | +2.5 |
| Alpha Tauri | 22.1 | +9.3 | +13.5 |
| Alfa Romeo | 23.4 | +3.3 | +14.8 |
| Haas | 28.2 | +15.7 | +19.6 |
| Ferrari | 52.8 | +6.7 | +44.2 |

Haas slot in ahead of Ferrari. It's sad to see 2019's winner regressing so much, but the problems don't seem too hard to fix.
