Performance-testing the Google I/O site

I've been looking at the performance of F1 websites recently, but before I dig into the last couple of teams, I figured I'd look a little closer to home, and dig into the Google I/O website.

- Part 1: Methodology & Alpha Tauri

- Part 2: Alfa Romeo

- Part 3: Red Bull

- Part 4: Williams

- Part 5: Aston Martin

- Part 6: Ferrari

- Part 7: Haas

- Part 8: McLaren

- ➡️ Bonus: Google I/O

- …more coming soon…

At Google I/O our little team is doing an AMA session. We (along with others) built Squoosh.app, PROXX, Tooling.Report, and the Chrome Dev Summit website, and we'd love to answer your questions on any of those sites, related technologies, web performance, web standards, and so on.

We'll be gathering questions closer to the event, but in the meantime, let's take a look at the performance of our event page.

In case you haven't been following the series, I'm going to see how it performs over a 3G connection, on a low-end phone. I covered the reasoning and other details around the scoring in part 1.

Anyway, on with the show:

Google I/O event pages

- Link

- First run: 26.3s (raw results)

- Second run: 12.9s (raw results)

- Total: 39.2s

There's nothing for around 9.5s, then a spinner. But, as I said when we were digging into the Alfa Romeo site, a loading spinner doesn't count as a first content render, it's just an apology for being slow 😀. The content arrives at the 26.3s mark.

It isn't a great result given that the core content is a session title, description, and a few avatars. So, what's going on? In previous parts of the F1 series I've broken the problem down into the various things that have hurt the page's performance, but in this case it's more of a fundamental architecture issue. Let's step through it…

What's happening?

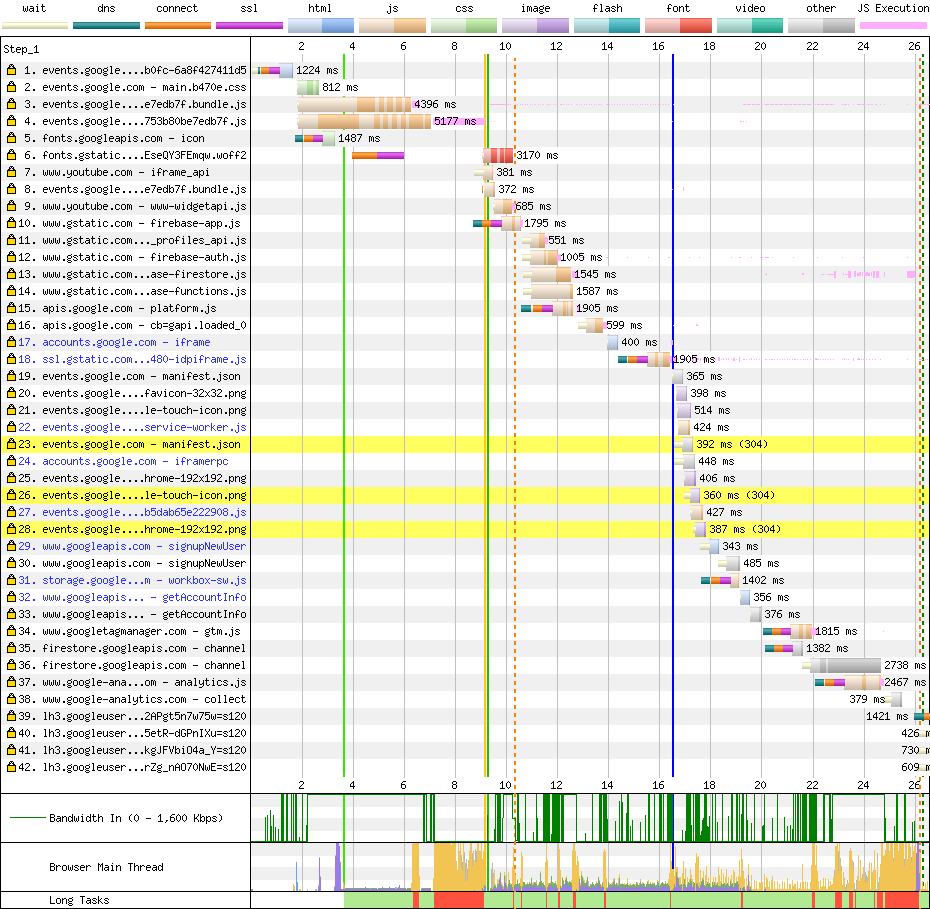

Here's the waterfall:

I don't have any behind-the-scenes insight into this site, and I wasn't part of the team that built it, so all of the analysis I present here is just from looking at the site through DevTools and WebPageTest.

The source of the page is pretty empty, so what is-and-isn't a render-blocking resource doesn't really matter since there's no content to block. In this case, the content is added later with JavaScript.

Rows 3 and 4 are the main JavaScript resources included in the HTML of the page. Together they're ~750kB on the wire, which is a lot considering the core content is a title and a paragraph. And that's the gzipped size. Once it's unzipped, it's 3.2MB of JavaScript for the browser to parse.

You can see the impact of this on the "browser main thread" part of the waterfall around the 7-9s mark, where it locks the browser up for a couple of seconds.

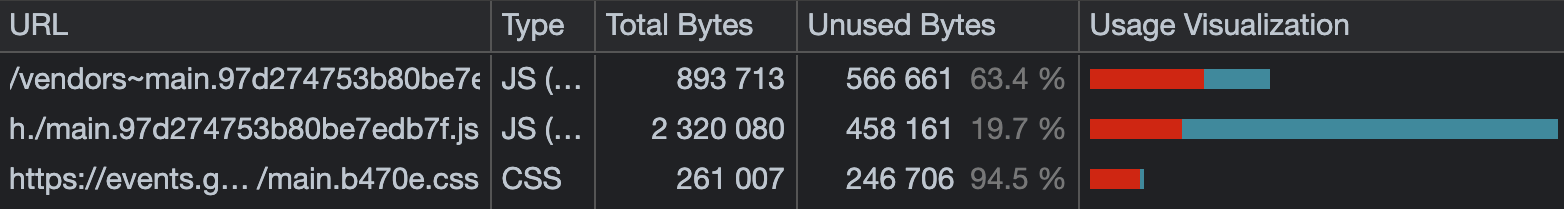

Here's Chrome DevTools' coverage analysis:

This suggests that over 2MB of JavaScript was 'used' here, but that doesn't mean it's necessary. Again, we're talking about a title and a paragraph; we know that doesn't need 2MB of JavaScript to render.

In fact, the large amount of coverage here is a red flag. It means that the JavaScript isn't just being parsed; a lot of it is executing too.

Looking at the source of the script, around half of it looks like Bodymovin instructions. Bodymovin is JSON that expresses animations exported from Adobe After Effects. The page we're looking at doesn't feature any of these animations, so it's all wasted time and effort. It's fine to download things that might be needed later, but in this case it's delaying things that are needed now.

Also in the source is a load of text content for other pages, in a multitude of languages. Again, this is a waste of time in terms of performance. The user's preferred language is sent as an HTTP header, so the server can respond with content tailored for that user. Static hosts such as Netlify support this too, so you can make use of this even if your site is static.
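As a rough sketch of what that server-side choice could look like, here's a small function that picks a language from the `Accept-Language` header. The function name, the list of supported languages, and the fallback are all made up for illustration; I don't know how the I/O site's server is set up.

```typescript
// Hypothetical helper: choose the best supported language for a request,
// given the raw Accept-Language header value (which may be missing).
function pickLanguage(
  acceptLanguage: string | undefined,
  supported: string[],
  fallback: string,
): string {
  if (!acceptLanguage) return fallback;

  // Turn "fr-CH, fr;q=0.9, en;q=0.8" into a list sorted by preference.
  const preferences = acceptLanguage
    .split(',')
    .map((part) => {
      const [tag, ...params] = part.trim().split(';');
      const qParam = params.find((p) => p.trim().startsWith('q='));
      const q = qParam ? Number(qParam.trim().slice(2)) : 1;
      return { tag: tag.trim().toLowerCase(), q: Number.isNaN(q) ? 0 : q };
    })
    .filter((pref) => pref.tag !== '' && pref.q > 0)
    .sort((a, b) => b.q - a.q);

  for (const { tag } of preferences) {
    // Try an exact match first ("pt-br"), then the base language ("pt").
    const base = tag.split('-')[0];
    const match =
      supported.find((lang) => lang.toLowerCase() === tag) ??
      supported.find((lang) => lang.toLowerCase() === base);
    if (match) return match;
  }
  return fallback;
}

// A Swiss-French browser gets the French content, and nothing else.
const chosen = pickLanguage('fr-CH, fr;q=0.9, en;q=0.8', ['en', 'fr'], 'en');
```

If the same URL serves different languages like this, the response should also include `Vary: Accept-Language` so caches don't hand one user's language to another.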

We looked at code splitting in Tooling.Report, which is well supported in modern bundlers. These huge scripts need to be split so that each page type has its own entry point, meaning leaf pages like this aren't downloading and processing heavy animations aimed at other pages.
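Here's a minimal sketch of the per-page entry point idea. In a real build each loader would be a dynamic `import()`, which bundlers turn into a separate chunk; the inline stand-in modules and the page names below are assumptions so the example runs on its own.

```typescript
type PageModule = { render: () => string };

// Records which chunks were requested, so the effect of splitting is visible.
const loadedChunks: string[] = [];

// Stand-ins for per-page chunks. With a bundler these would look like
// `() => import('./pages/session')` (a hypothetical path).
const pageLoaders: Record<string, () => Promise<PageModule>> = {
  home: async () => {
    loadedChunks.push('home');
    return { render: () => '<h1>Home, with heavy animations</h1>' };
  },
  session: async () => {
    loadedChunks.push('session');
    return { render: () => '<h1>Session title</h1>' };
  },
};

// Only the code for the requested page type is loaded.
async function renderPageType(pageType: string): Promise<string> {
  const load = pageLoaders[pageType];
  if (!load) throw new Error(`Unknown page type: ${pageType}`);
  const page = await load();
  return page.render();
}

// Visiting a session page never touches the 'home' chunk.
const pendingSessionHtml = renderPageType('session');
```

The point is that the animation-heavy code lives behind the `home` loader, so a leaf page never pays for it.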

But, the main problem is that the content is dependent on JavaScript. A session title and a description shouldn't require JavaScript to render.

Ok, we're now 9.5s through the page load:

This is the point where we get the spinner. Back to the waterfall:

The JavaScript loaded in the previous step then loads Firebase JavaScript in row 10. This script is on another server, so we pay the price of another HTTP connection. I covered this in detail in part 1. The performance damage here could be recovered a little using `<link rel="preload">`, but ultimately the real solution is to include the core content in HTML, and avoid blocking it with JavaScript.

That Firebase JavaScript loads more Firebase JavaScript (rows 11-15), and time is lost by loading these in series rather than in parallel. Again, this could be recovered a little with `<link rel="preload">`.
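To put rough numbers on series versus parallel, here's a toy model. The durations are invented for illustration, not measured from the I/O site, and the model ignores bandwidth sharing and connection setup, so treat it as a sketch of the shape of the problem rather than a prediction.

```typescript
// Made-up durations (in milliseconds) for a chain of dependent script requests.
const requestDurations = [900, 700, 800, 600];

// In series, each request only starts once the previous script has arrived
// and run, so the costs add up.
function seriesTime(durations: number[]): number {
  return durations.reduce((total, duration) => total + duration, 0);
}

// If the browser knows every URL up front (for example via preload), the
// requests can overlap, so the total is roughly the slowest single request.
function parallelTime(durations: number[]): number {
  return durations.length === 0 ? 0 : Math.max(...durations);
}

const inSeries = seriesTime(requestDurations); // 3000
const inParallel = parallelTime(requestDurations); // 900
```

Real connections won't overlap this perfectly on 3G, but the gap between "sum of the chain" and "longest link in the chain" is the time that preloading tries to win back.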

The JavaScript in row 15 appears to be related to getting login info. It's on yet another server, so we pay another connection cost. It goes on to request more JavaScript in row 16, which loads an iframe in row 17, which loads a script (on yet another server) in row 18.

We started loading script at the 2s mark, and we're still loading it at the 16s mark. And we're not done yet.

Rows 29, 30, 32, and 33 are more requests relating to login info (in this test the user is logged out), and they're happening in series.

Now we're at the 20s mark, and it's time to fetch the data for the page. A request is made to Firestore in row 35, which is on yet another server, so we pay yet another connection cost. A `<link rel="preconnect">` or even `<link rel="preload">` could have helped a bit here.

That request tells the script to make another request, which is row 36. This is a 3MB JSON resource containing information about every session and speaker at Google I/O. Parsing and querying this hits the main thread hard, locking the device up for a couple of seconds.

To make matters worse, this resource is not cacheable. Once you're on the site, all navigations are SPA, but future visits to the site involve downloading and processing that 3MB JSON again, even if you just want to get the time for a single session. Unfortunately, because of the sheer amount of data to query, even the SPA navigations are sluggish on a high-end MacBook.

But that brings us to the 26s mark, where the user can now see the title of the session.

What would have been faster?

Imagine you go to a restaurant, take a seat, and 20 minutes later you still haven't been given a menu. You ask where it is, and you're told "oh, we're currently cooking you everything you might possibly ask for. Then we'll give you the menu, you'll pick something, and we'll be able to give you it instantly, because it'll all be ready". This sounds like a silly way to do things, but it's the strategy the I/O site is using.

Instead, sites should focus on delivering the first interaction first, which in this case is telling the user the title, time, and description of the session. This is easy to do with just HTML and a bit of CSS. It doesn't need JavaScript. It certainly doesn't need 8MB of JavaScript and JSON.
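To show how little is involved, here's a sketch of rendering that core content to HTML on the server or at build time. The `Session` shape and function names are assumptions for illustration; the real I/O data will look different.

```typescript
// Hypothetical shape for the data a session page needs up front.
interface Session {
  title: string;
  time: string;
  description: string;
}

// Escape text so it can be placed safely inside HTML.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;');
}

// Produce the core content as plain HTML, with no client-side JavaScript.
function renderSessionPage(session: Session): string {
  return [
    '<!DOCTYPE html>',
    `<title>${escapeHtml(session.title)}</title>`,
    `<h1>${escapeHtml(session.title)}</h1>`,
    `<p><time>${escapeHtml(session.time)}</time></p>`,
    `<p>${escapeHtml(session.description)}</p>`,
  ].join('\n');
}

const sessionHtml = renderSessionPage({
  title: 'AMA: Web performance & standards',
  time: '10:00',
  description: 'Ask us anything.',
});
```

Everything else (login state, schedules, animations) can be layered on after this has been sent to the user.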

Also, the I/O site makes good use of logged-in state, allowing users to mark which sessions they're interested in. However, the authentication method is massively hurting performance with a long string of HTTP requests. I don't have experience with Firebase Auth, so I don't know if it's being used properly here, but I know when I built Big Web Quiz I was able to get auth info in a single request.

However, Big Web Quiz had its own server, so that might not be possible for the I/O site. If that's the case, the I/O site should render with 'unknown' login state, and the login-specific details can load in the background and update the page when they're ready, without blocking things like session info.
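Here's a sketch of what modelling that 'unknown' state could look like. The type and function names are hypothetical, and the output is plain text rather than real markup to keep it short.

```typescript
// Login starts as 'unknown' and is only resolved once auth has responded.
type LoginState =
  | { status: 'unknown' }
  | { status: 'logged-out' }
  | { status: 'logged-in'; name: string };

// The account area is the only part of the page that depends on login state.
function accountLabel(state: LoginState): string {
  switch (state.status) {
    case 'unknown':
      return ''; // Show nothing user-specific until we actually know.
    case 'logged-out':
      return 'Sign in';
    case 'logged-in':
      return `Hi, ${state.name}`;
  }
}

// Session info never waits on auth: it renders the same in every state.
function renderSessionView(sessionTitle: string, state: LoginState): string {
  return `${sessionTitle} [${accountLabel(state)}]`;
}

// First paint: the session title is there straight away.
const firstPaint = renderSessionView('Session title', { status: 'unknown' });
// Later, when the slow auth requests settle, only the account area changes.
const afterAuth = renderSessionView('Session title', { status: 'logged-out' });
```

The long chain of auth requests still happens, but it's no longer sitting between the user and the session title.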

Anything else?

One relatively minor thing:

Row 6 is an icon font. Icon fonts are bad for the same reasons sprite sheets are bad, and I dug into those on the McLaren site.

In this case, it only seems to be used for the hamburger icon in the top-left of the page. So the user downloads a 50kB font to display something that's 125 bytes as an SVG.

How fast could it be?

In other posts in this series I put the site through a script to pull out the JavaScript and render the page as HTML and CSS, but that's just because I don't have time to fully rewrite the sites. However, in this case we can compare it to a Chrome Dev Summit session page, which has exactly the same type of core content:

- Google I/O

- Chrome Dev Summit

The Chrome Dev Summit site renders session data in two seconds on the same connection & device. The second load is under one second as the site is served offline-first using a service worker.

Scoreboard

| Site | Score | vs 2019 | Gap to leader |
|---|---|---|---|
| Red Bull | 8.6 | -7.2 | Leader |
| Aston Martin | 8.9 | -75.3 | +0.3 |
| Williams | 11.1 | -3.0 | +2.5 |
| Alpha Tauri | 22.1 | +9.3 | +13.5 |
| Alfa Romeo | 23.4 | +3.3 | +14.8 |
| Haas | 28.2 | +15.7 | +19.6 |
| McLaren | 36.0 | -4.7 | +27.4 |
| Google I/O | 39.2 | n/a | +30.6 |
| Ferrari | 52.8 | +6.7 | +44.2 |

Unfortunately the Google I/O site ends up towards the back compared to the F1 sites.
